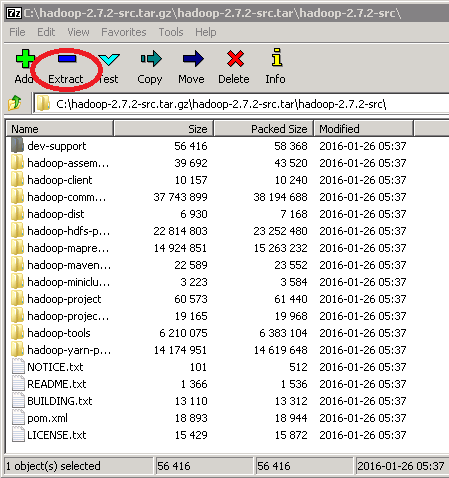

Quick Start Guide for Deeplearning4j

This is everything you need to run DL4J examples and begin your own projects.

We recommend that you join our Gitter Live Chat. Gitter is where you can request help and give feedback, but please do use this guide before asking questions we've answered below. If you are new to deep learning, we've included a road map for beginners with links to courses, readings and other resources. If you need an end-to-end tutorial to get started, including setup, then please go to our getting started guide.

A Taste of Code

In Deeplearning4j, everything starts with a MultiLayerConfiguration, which organizes a network's layers and their hyperparameters. Hyperparameters are variables that determine how a neural network learns. They include how many times to update the weights of the model, how to initialize those weights, which activation function to attach to the nodes, which optimization algorithm to use, and how fast the model should learn. This is what one configuration would look like:

MultiLayerConfiguration conf = new NeuralNetConfiguration.Builder()
    .iterations(1)
    .weightInit(WeightInit.XAVIER)
    .activation("relu")
    .optimizationAlgo(OptimizationAlgorithm.STOCHASTIC_GRADIENT_DESCENT)
    .learningRate(0.05)

With Deeplearning4j, you add a layer by calling layer on the NeuralNetConfiguration.Builder, specifying its place in the order of layers (the zero-indexed layer below is the input layer), the number of input and output nodes, nIn and nOut, as well as the type, DenseLayer:

.layer(0, new DenseLayer.Builder().nIn(784).nOut(2…

Once you've configured your net, you train the model with model.fit.

Prerequisites

You should have the following installed to use this Quick Start guide: Java, Maven, an IDE such as IntelliJ, and Git.

DL4J targets professional Java developers who are familiar with production deployments, IDEs and automated build tools. Working with DL4J will be easiest if you already have experience with these. If you are new to Java or unfamiliar with these tools, read the details below for help with installation and setup. Otherwise, skip to DL4J Examples.

If you don't have the required version of Java, download the current Java Development Kit (JDK). To check if you have a compatible version of Java installed, use the following command: java -version. Please make sure you have a 64-bit version of Java installed, as you will see a "no jnind4j" error if you use a 32-bit version instead.

Maven is a dependency management and automated build tool for Java projects. It works well with IDEs such as IntelliJ and lets you install DL4J project libraries easily. Install or update Maven to the latest release following their instructions for your system. To check if you have the most recent version of Maven installed, enter the following: mvn --version. If you are working on a Mac, you can simply enter the following into the command line: brew install maven.

Maven is widely used among Java developers and it's pretty much mandatory for working with DL4J. If you come from a different background and Maven is new to you, check out Apache's Maven overview and our introduction to Maven for non-Java programmers, which includes some additional troubleshooting tips. Other build tools such as Ivy and Gradle can also work, but we support Maven best.

An Integrated Development Environment (IDE) allows you to work with our API and configure neural networks in a few steps. We strongly recommend using IntelliJ, which communicates with Maven to handle dependencies. The community edition of IntelliJ is free.
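The 64-bit Java prerequisite can also be checked from inside the JVM, which is handy when the java on your command line is not the one your IDE uses. The following is a minimal sketch, not part of DL4J: the class and method names are made up for this example, the sun.arch.data.model property is specific to HotSpot-style JVMs, and the os.arch fallback is only a heuristic.

```java
// A minimal sketch: report whether the running JVM is 64-bit.
// The class and method names are hypothetical, chosen for this example.
final class JvmBitnessCheck {

    // Decides 64-bit-ness from two system property values.
    // dataModel: value of "sun.arch.data.model" ("32", "64", "unknown",
    //            or null on JVMs that do not define it).
    // osArch:    value of "os.arch", used only as a fallback heuristic.
    static boolean is64Bit(String dataModel, String osArch) {
        if (dataModel != null && !dataModel.trim().equals("unknown")) {
            return dataModel.trim().equals("64");
        }
        // Assumption: the architecture name of a 64-bit JVM contains "64"
        // (amd64, x86_64, aarch64). This is a heuristic, not a guarantee.
        return osArch != null && osArch.contains("64");
    }

    public static void main(String[] args) {
        String dataModel = System.getProperty("sun.arch.data.model");
        String osArch = System.getProperty("os.arch");
        System.out.println("java.version = " + System.getProperty("java.version"));
        System.out.println("os.arch = " + osArch);
        System.out.println("sun.arch.data.model = " + dataModel);
        System.out.println(is64Bit(dataModel, osArch)
                ? "This JVM looks 64-bit."
                : "This JVM does not look 64-bit; native libraries may fail to load.");
    }
}
```

Running it with the same JDK that your IDE is configured to use shows which JVM will actually load the native libraries.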
Besides IntelliJ, there are other popular IDEs such as Eclipse and NetBeans. IntelliJ is preferred, however, and using it will make finding help on Gitter Live Chat easier if you need it.

Install the latest version of Git. If you already have Git, you can update to the latest version using Git itself: git clone git://git…

To set up the DL4J examples:

1. Use the command line to enter the following: git clone https://github.…
2. Open IntelliJ and choose Import Project. Then select the main DL4J examples directory.
3. Choose "Import project from external model" and ensure that Maven is selected.
4. Continue through the wizard's options. Select the SDK that begins with jdk. (You may need to click on a plus sign to see your options.) Then click Finish. Wait a moment for IntelliJ to download all the dependencies. You'll see the horizontal bar working on the lower right.
5. Pick an example from the file tree on the left. Right-click the file to run.

Using DL4J In Your Own Projects: Configuring the POM.xml File

To run DL4J in your own projects, we highly recommend using Maven for Java users, or a tool such as SBT for Scala. The basic set of dependencies includes deeplearning4j, the CPU version of the ND4J library that powers DL4J, and datavec-api (DataVec is our library for vectorizing and loading data).

Every Maven project has a POM file, and the examples rely on it to pull in these dependencies.

Within IntelliJ, you will need to choose the first Deeplearning4j example you are going to run. We suggest MLPClassifierLinear, as you will almost immediately see the network classify two groups of data in our UI. The file on GitHub can be found here.

To run the example, right-click on it and select the green button in the drop-down menu. You will see, in IntelliJ's bottom window, a series of scores.
The rightmost number is the error score for the network's classifications. If your network is learning, then that number will decrease over time with each batch it processes. At the end, this window will tell you how accurate your neural network model has become.

In another window, a graph will appear, showing you how the multilayer perceptron (MLP) has classified the data in the example.

Congratulations! You just trained your first neural network with Deeplearning4j. Now, why don't you try our next tutorial, MNIST for Beginners, where you'll learn how to classify images.

Next Steps

Join us on Gitter. We have three big community channels:

- DL4J Live Chat is the main channel for all things DL4J. Most people hang out here.
- Tuning Help is for people just getting started with neural networks. Beginners, please visit us here!
- Early Adopters is for those who are helping us vet and improve the next release. WARNING: This is for more experienced folks.

Read the introduction to deep neural networks or one of our detailed tutorials. Check out the more detailed Comprehensive Setup Guide. Browse the DL4J documentation.

Python folks: If you plan to run benchmarks on Deeplearning4j comparing it to a Python framework, please read these instructions on how to optimize heap space, garbage collection and ETL on the JVM. Following them should noticeably improve your results.

Troubleshooting

Q: I'm using a 64-bit Java on Windows and still get the "no jnind4j" error.

A: You may have incompatible DLLs on your PATH. To tell DL4J to ignore those, you have to add a VM parameter (Run > Edit Configurations > VM Options in IntelliJ).

Q: SPARK ISSUES. I am running the examples and having issues with the Spark-based examples such as distributed training or DataVec transform options.

A: You may be missing some dependencies that Spark requires. See this Stack Overflow discussion for potential dependency issues. Windows users may need the winutils.exe from Hadoop. Download winutils.exe and make it available through HADOOP_HOME.

Troubleshooting: Debugging UnsatisfiedLinkError on Windows

Windows users might be seeing something like:

Exception in thread "main" java.lang.ExceptionInInitializerError
    at org.deeplearning4j… NeuralNetConfiguration$Builder …
    at … MNISTAnomalyExample …
Caused by: java.lang.RuntimeException: org.nd4j… Nd4jBackend$NoAvailableBackendException

Configure Native Spark Modeling in SAP BusinessObjects Predictive Analytics 3

Native Spark Modeling has been available since SAP BusinessObjects Predictive Analytics version 2. That version supported Native Spark Modeling for classification scenarios. The latest release of SAP BusinessObjects Predictive Analytics is version 3.

The main business benefit of Native Spark Modeling is the ability to train more models in a shorter period of time, and hence to gain better insight into business challenges by learning from the predictive models and targeting the right customers very quickly.

Native Spark Modeling is also known as IDBM (In-Database Modeling). With this feature of SAP BusinessObjects Predictive Analytics, model training and scoring can be pushed down to the Hadoop database level through the Spark layer. The Native Spark Modeling capability is delivered in Hadoop through a Scala program in the Spark engine.

In this blog you will get familiar with the end-to-end configuration of Native Spark Modeling on Hadoop using SAP BusinessObjects Predictive Analytics. Let's review the configuration steps in detail below.

1. Install SAP BusinessObjects Predictive Analytics. According to your deployment choice, install either the desktop or the client/server mode. Refer to the steps in the installation overview or the installation guides (section "Install PA"). During installation, all the required configuration files and the pre-delivered packages for Native Spark Modeling are installed in the local desktop or server location.

2. Check the SAP BusinessObjects Predictive Analytics installation. In this scenario, the SAP BusinessObjects Predictive Analytics server has been chosen as the deployment option and is installed on a Windows server. After a successful installation, navigate on the Windows server into the SAP Predictive Analytics\Server 3… folder. You will see the SparkConnector folder, which contains all the required configuration files and the Native Spark Modeling functionality in the form of jar files. Open the SparkConnector folder to check its directory structure.

3. Check whether the winutils.exe file is present. Apache Spark requires the executable file winutils.exe on the Windows operating system when running against a non-Windows cluster.

4. Check the required client configuration XML files in the hadoopConfig folder. Create a subfolder for each Hive ODBC DSN. In this scenario the subfolder is named IDBMHIVEDUBCLOUDERA. This is not a fixed name; you can name it according to your preference. Each subfolder should contain the 3 Hadoop client XML configuration files for the cluster (core-site.xml, …). You can use admin tools such as Hortonworks Ambari or Cloudera Manager to download these files. Note: this subfolder is linked to the Hive ODBC DSN by the hadoopConfigDir property in the SparkConnections.ini file, not by the subfolder name.

5. Download the required Spark version jar into the Jars folder. Download the additional assembly jar files and copy them into the SparkConnector\Jars folder.

6. Configure Spark.cfg (client/server mode) or KJWizardJNI.ini (desktop mode). As the SAP BusinessObjects Predictive Analytics server is installed here, open the Spark.cfg file in Notepad or any other text editor. Native Spark Modeling supports the Spark versions offered by the two major Hadoop enterprise vendors at present, Cloudera and Hortonworks. As a Cloudera Hadoop server is being used in this scenario, keep the configuration path for the Cloudera server's Spark version (1.…) active in Spark.cfg and deactivate the entry for the Hortonworks server's Spark version. The path to the connection folders and some tuning options can also be set here. For the server, navigate to C:\Program Files\SAP Predictive Analytics\Server 3…\SparkConnector and edit Spark.cfg. For the desktop, navigate to C:\Program Files\SAP Predictive Analytics\Server 3…\EXE\Clients\KJWizardJNI and edit the KJWizardJNI.ini file.

7. Set up Model Training Delegation for Native Spark Modeling. In the Automated Analytics menu, navigate to File > Preferences > Model Training Delegation. By default the "Native Spark Modeling when possible" flag should be switched on; if it is not, switch it on. Then press OK.

8. Create an ODBC connection to the Hive server as a data source for Native Spark Modeling. This connection is later used in Automated Analytics to select an Analytic Data Source (ADS) or Hive tables as the input data source for Native Spark Modeling. Open the Windows ODBC Data Source Administrator. In the User DSN tab press Add. Select the DataDirect 7… SP5 Apache Hive Wire Protocol from the driver list and press Finish. In the General tab enter:

Data Source Name: IDBMHIVEDUBCLOUDERA (this is just an example; no fixed name is required)
Host Name: xxxxx
Port Number: 10000
Database Name: default

In the Security tab set Authentication Method to "0 - User ID/Password" and set the user name and password. Switch on the flag Use Native Catalog Functions; this disables the SQL Connector feature and allows the driver to execute HiveQL directly. Press the Test Connect button.
If the connection is successful, press Apply and then OK. If the connection test fails even when the connection information is correct, make sure that the Hive Thrift server is running.

9. Set up the SparkConnections.ini file for your individual ODBC DSN. This file contains Spark connection entries specific to each Hive data source name (DSN). For example, if there are 3 Hive ODBC DSNs, the user has the flexibility to say that two should run on IDBM and not the last one; that is, DSNs not present in the SparkConnections.ini file fall back to the normal modelling process using the Automated Analytics engine.

To set the required configuration parameters for Native Spark Modeling, navigate to the SAP BusinessObjects Predictive Analytics 3 Desktop or Server installation folder. For a server installation, go to C:\Program Files\SAP Predictive Analytics\Server 3…\SparkConnector; for a desktop installation, go to C:\Program Files\SAP Predictive Analytics\Desktop 3…\Automated\SparkConnector. Edit the SparkConnections.ini file, then save it.

As a Cloudera Hadoop box is being used in this scenario, set the parameters in the file according to the configuration requirements of Cloudera clusters.

For Cloudera clusters: to enable Native Spark Modeling against a Hive data source, you need to define at least the minimum properties below. Each entry after "SparkConnection" needs to match the Hive ODBC DSN (Data Source Name) exactly. Upload the spark-1… jar to HDFS and reference its HDFS location in an entry that begins with SparkConnection.IDBMHIVEDUBCLOUDERA.

Two parameters are mandatory and have to be set for each DSN: hadoopConfigDir and hadoopUserName. hadoopConfigDir is the directory that holds the Hadoop client XML configuration files (core-site.xml, yarn-site.xml, …) for this DSN; use relative paths to these files. For example: SparkConnection.IDBMHIVEDUBCLOUDERA.…
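The rule that every DSN needs both hadoopConfigDir and hadoopUserName can be checked mechanically before starting Automated Analytics. The sketch below is not an SAP tool. It assumes that entries are written as SparkConnection.<DSN>.<property>=<value> and that the file is close enough to Java properties syntax to be read with java.util.Properties; the class name, the sample values, and the OTHERDSN name are all hypothetical.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

// A minimal sketch: list the mandatory SparkConnection properties that are
// missing for one DSN. Assumes the key layout SparkConnection.<DSN>.<property>.
final class SparkConnectionCheck {

    // The two parameters the text describes as mandatory for each DSN.
    static final String[] MANDATORY = {"hadoopConfigDir", "hadoopUserName"};

    // Returns the full keys that are absent or blank for the given DSN.
    static List<String> missingMandatory(String iniText, String dsn) {
        Properties props = new Properties();
        try {
            // Note: Properties treats backslashes as escape characters, so this
            // sketch suits relative paths written with forward slashes.
            props.load(new StringReader(iniText));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        List<String> missing = new ArrayList<>();
        for (String name : MANDATORY) {
            String key = "SparkConnection." + dsn + "." + name;
            String value = props.getProperty(key);
            if (value == null || value.trim().isEmpty()) {
                missing.add(key);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        // Hypothetical sample content; the values are placeholders.
        String sample =
                "SparkConnection.IDBMHIVEDUBCLOUDERA.hadoopConfigDir=hadoopConfig/IDBMHIVEDUBCLOUDERA\n"
              + "SparkConnection.IDBMHIVEDUBCLOUDERA.hadoopUserName=exampleuser\n";
        // Both mandatory keys are present for this DSN.
        System.out.println(missingMandatory(sample, "IDBMHIVEDUBCLOUDERA"));
        // A DSN without entries reports both keys as missing.
        System.out.println(missingMandatory(sample, "OTHERDSN"));
    }
}
```

An empty list for a DSN means both mandatory parameters are set; a DSN that reports both keys as missing has no usable entry and, as described above, would fall back to the normal Automated Analytics modelling process.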

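Both the DL4J Spark troubleshooting answer and the SAP setup depend on winutils.exe being where Hadoop expects it on Windows. Hadoop's client code looks for bin\winutils.exe under the directory named by the hadoop.home.dir system property or the HADOOP_HOME environment variable. The sketch below checks that location; the class and method names are made up for this example, and it does not cover the SparkConnector folder layout, which the text above does not spell out.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// A minimal sketch: check that winutils.exe exists under the Hadoop home
// directory. Class and method names are hypothetical.
final class WinutilsCheck {

    // Builds the conventional location <hadoopHome>/bin/winutils.exe.
    static Path winutilsPath(String hadoopHome) {
        return Paths.get(hadoopHome, "bin", "winutils.exe");
    }

    // True only if a Hadoop home is given and the executable is a regular file.
    static boolean winutilsPresent(String hadoopHome) {
        return hadoopHome != null
                && !hadoopHome.isEmpty()
                && Files.isRegularFile(winutilsPath(hadoopHome));
    }

    public static void main(String[] args) {
        // The system property takes precedence over the environment variable.
        String hadoopHome = System.getProperty("hadoop.home.dir");
        if (hadoopHome == null) {
            hadoopHome = System.getenv("HADOOP_HOME");
        }
        if (hadoopHome == null || hadoopHome.isEmpty()) {
            System.out.println("Neither hadoop.home.dir nor HADOOP_HOME is set.");
        } else if (winutilsPresent(hadoopHome)) {
            System.out.println("Found " + winutilsPath(hadoopHome));
        } else {
            System.out.println("Missing " + winutilsPath(hadoopHome));
        }
    }
}
```

Run it from the same environment (IDE run configuration or shell) as the Spark job, since that is where the property and the environment variable are resolved.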